Bidirectional Model-Data Provenance

Closing the Loop from Edge to Retraining

A medical diagnostic model runs on 500 edge devices in hospitals. Six months later, the FDA asks which model version produced specific diagnostic results in January. The answer determines recalls, fines, or regulatory approval for the next product.

Can the question be answered in 48 hours with cryptographic proof? Or does it take three weeks of cross-referencing deployment logs, S3 timestamps, and spreadsheets, with gaps left over?

This is the provenance problem. Not just “where did this model come from” but “which data came from which model version, and can you prove it?”

The Real Problem: Two Disconnected Workflows

Traditional ML infrastructure treats model distribution and data collection as separate systems:

Models flow out: CI/CD pipelines, container registries, deployment scripts, version tags.

Data flows in: Custom collectors, S3 buckets, data lakes, ETL pipelines, manual tracking.

The connection between them? Spreadsheets. Naming conventions. Hope.

This breaks when you need to:

Retrain models on data from specific versions

Prove regulatory compliance with audit trails

Debug performance issues across model versions

Correlate field failures with deployment history

The root cause: models and data live in different systems with different identities. A model is medical-diagnostic:v2.1.4. The training data collected from it is s3://bucket/device-042/2025-01-15/batch-003.parquet. Nothing in either identifier cryptographically ties one to the other.

The Solution: Bidirectional Artifact Flow

Treat both models and training data as versioned OCI artifacts with cryptographic references between them.

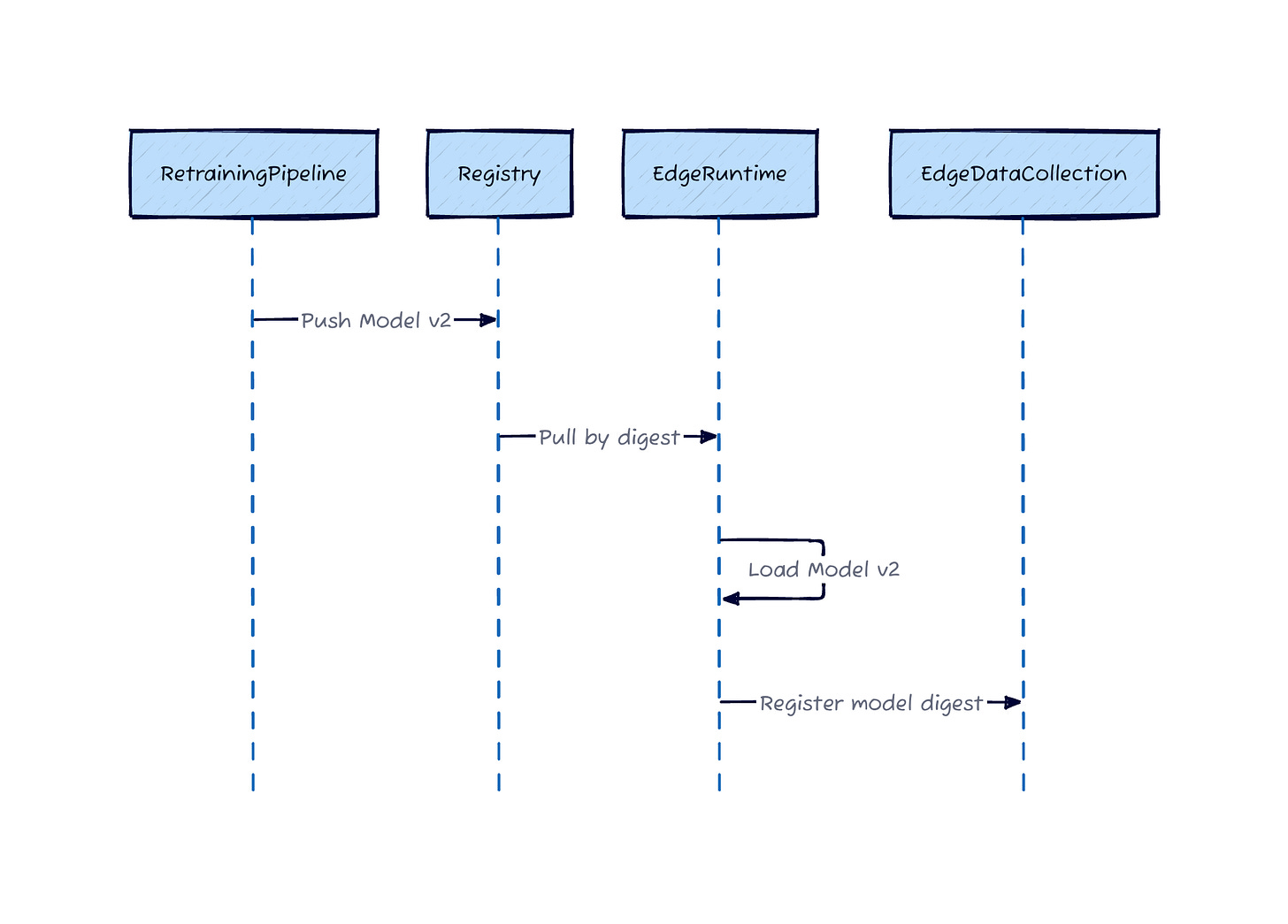

Outbound (Model Distribution): Models package as ModelKits—OCI artifacts containing weights, inference code, and configuration. Edge devices pull by content-addressable digest: sha256:abc123...

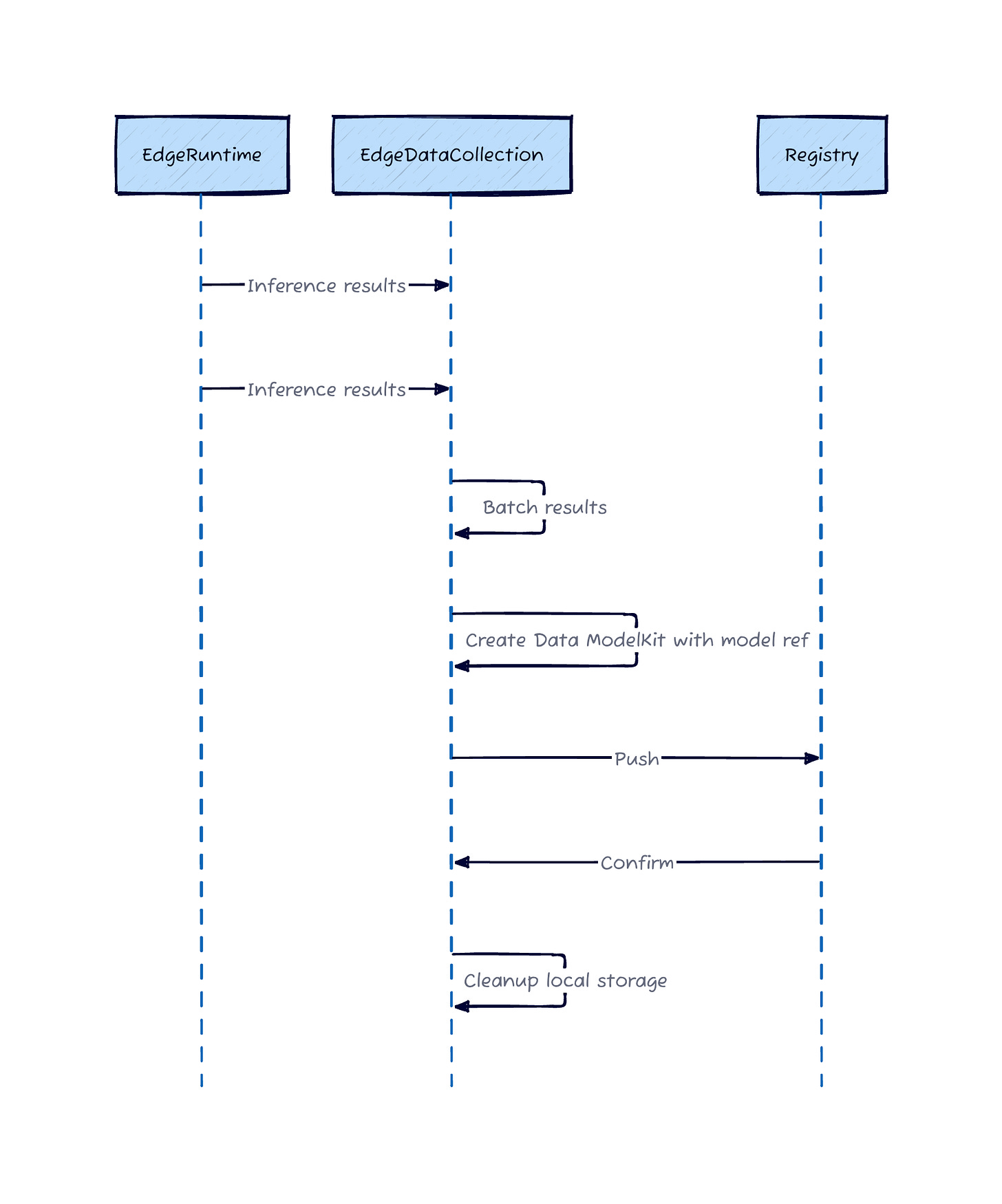

Inbound (Data Collection): Training data packages as separate ModelKits that reference the source model by digest. These Training Data ModelKits contain inference outputs, optional ground truth labels, and metadata (device ID, timestamps, inference parameters).

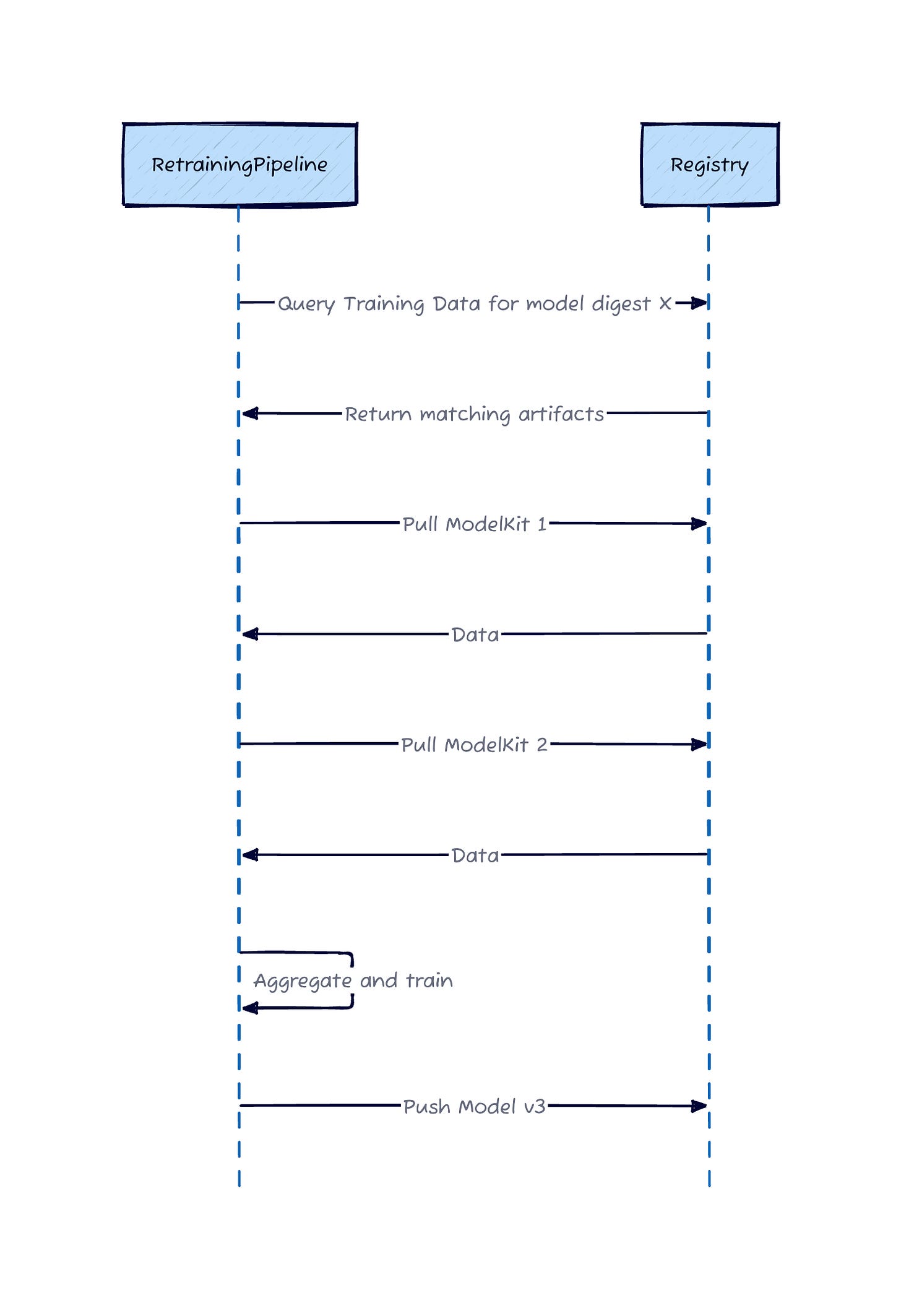

Single Infrastructure: The same OCI registry handles both directions. Models distribute outbound. Training data flows inbound. Query by model digest to aggregate data from specific versions across your entire device fleet.

Cryptographic Chain: Sign and attest both artifact types. The provenance link is mathematically verifiable, not administratively maintained.

How It Works

Model Deployment
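
As a rough illustration of the deployment step, the sketch below shows what pulling by content-addressable digest buys you: the device can recompute the hash of what it received and refuse anything that does not match the pinned value. The in-memory `REGISTRY`, `push`, and `pull_verified` are hypothetical stand-ins for a real OCI registry and client, not the KitOps API, and the model bytes are a placeholder.

```python
import hashlib

def oci_digest(blob: bytes) -> str:
    """Return an OCI-style digest string: 'sha256:' plus the hex SHA-256 of the bytes."""
    return "sha256:" + hashlib.sha256(blob).hexdigest()

# Hypothetical in-memory stand-in for an OCI registry, keyed by digest.
REGISTRY = {}

def push(blob: bytes) -> str:
    """Store a blob under its own digest and return that digest."""
    digest = oci_digest(blob)
    REGISTRY[digest] = blob
    return digest

def pull_verified(pinned_digest: str) -> bytes:
    """Fetch a blob by digest and reject it if the content does not hash to that digest."""
    blob = REGISTRY[pinned_digest]
    actual = oci_digest(blob)
    if actual != pinned_digest:
        raise ValueError(f"digest mismatch: expected {pinned_digest}, got {actual}")
    return blob

# Placeholder for a packaged model (weights, inference code, configuration).
model_bytes = b"placeholder model package"
model_digest = push(model_bytes)

# An edge device pinned to model_digest pulls and verifies before loading.
deployed = pull_verified(model_digest)
```

Because the identifier is derived from the content, a version tag can be moved or mistyped, but a digest can only ever name one exact set of bytes.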

Inference and Data Collection
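
The collection step can be sketched as a record that carries the source model's digest alongside the inference outputs and metadata, and is itself content-addressed. This is a simplified illustration, not the ModelKit format: the field names (`source_model_digest`, `device_id`, and so on) and the `build_training_record` helper are assumptions made for the example, and the model digest is computed from placeholder bytes.

```python
import hashlib
import json
from datetime import datetime, timezone

def build_training_record(model_digest, device_id, outputs, params,
                          labels=None, collected_at=None):
    """Serialize one batch of field data and return (data_digest, payload_bytes).

    The record embeds the digest of the model that produced the outputs,
    so the link to the model version travels inside the artifact itself.
    """
    record = {
        "source_model_digest": model_digest,   # hypothetical field name
        "device_id": device_id,
        "collected_at": collected_at or datetime.now(timezone.utc).isoformat(),
        "inference_parameters": params,
        "inference_outputs": outputs,
        "ground_truth_labels": labels,          # optional, may be None
    }
    # Canonical JSON (sorted keys, fixed separators) so equal records hash equally.
    payload = json.dumps(record, sort_keys=True, separators=(",", ":")).encode("utf-8")
    data_digest = "sha256:" + hashlib.sha256(payload).hexdigest()
    return data_digest, payload

# Placeholder digest standing in for the deployed model's real digest.
model_digest = "sha256:" + hashlib.sha256(b"placeholder model package").hexdigest()

data_digest, payload = build_training_record(
    model_digest=model_digest,
    device_id="device-042",
    outputs=[{"case": 1, "score": 0.93}],
    params={"threshold": 0.5},
    collected_at="2025-01-15T00:00:00+00:00",
)
```

Since the model digest is part of the hashed payload, changing which model a batch claims to come from changes the batch's own digest, which is what makes the link tamper-evident once the artifact is signed.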

Retraining Query and Aggregation
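
The retraining query then reduces to a lookup by model digest. The sketch below runs against a hypothetical in-memory index; a real registry with metadata query support would expose this through its own API, which is not shown here. The entry fields and the digests (repeated hex characters) are made up for the example.

```python
from collections import defaultdict

def data_for_model(index, model_digest):
    """Return every training-data entry that references the given model digest."""
    return [entry for entry in index if entry["source_model_digest"] == model_digest]

def group_by_device(entries):
    """Group training-data digests by the device that produced them."""
    grouped = defaultdict(list)
    for entry in entries:
        grouped[entry["device_id"]].append(entry["data_digest"])
    return dict(grouped)

# Made-up digests for two model versions.
MODEL_A = "sha256:" + "a" * 64
MODEL_B = "sha256:" + "b" * 64

# Hypothetical index of training-data artifacts pushed by the fleet.
INDEX = [
    {"data_digest": "sha256:" + "1" * 64, "source_model_digest": MODEL_A, "device_id": "device-042"},
    {"data_digest": "sha256:" + "2" * 64, "source_model_digest": MODEL_B, "device_id": "device-042"},
    {"data_digest": "sha256:" + "3" * 64, "source_model_digest": MODEL_A, "device_id": "device-107"},
]

matches = data_for_model(INDEX, MODEL_A)
by_device = group_by_device(matches)
```

Here `matches` holds only the batches produced by `MODEL_A`, regardless of which device uploaded them, and `by_device` shows how that data is spread across the fleet.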

The beauty: models and data share the same identity system. Querying by model digest returns all training data generated by that version across your entire fleet. The provenance is in the artifact structure itself.

This Works Today

The pattern relies on standard OCI infrastructure and existing tools. KitOps handles ModelKit creation and streaming pack-and-push. Any OCI-compliant registry works, though registries with metadata query capabilities (like Jozu Hub) simplify data aggregation. Cosign or Notary provides signing.

The architecture scales with your registry infrastructure. If you’re deploying models to edge devices in regulated environments such as automotive, medical devices, or industrial control systems, solving the provenance problem isn’t optional. Auditors will ask which model version produced which results. This architecture answers that question with cryptographic proof instead of spreadsheets.

The bidirectional flow isn’t novel infrastructure. It’s the logical extension of treating ML artifacts the same way we learned to treat application artifacts: versioned, immutable, content-addressable, and cryptographically verifiable.