The State of OCI Artifacts for AI/ML

I’ve spent the last 18 months watching OCI artifacts for AI/ML go from “interesting idea” to production-grade infrastructure in enterprises. The shift is real, but it isn’t happening where most people first expected. OCI isn’t replacing S3 buckets everywhere; it’s becoming the standard for Kubernetes-native ML deployments where governance, provenance, supply chain controls, and container-centric operations matter. Here’s what’s actually happening with OCI for ML as of October 2025.

Momentum is growing

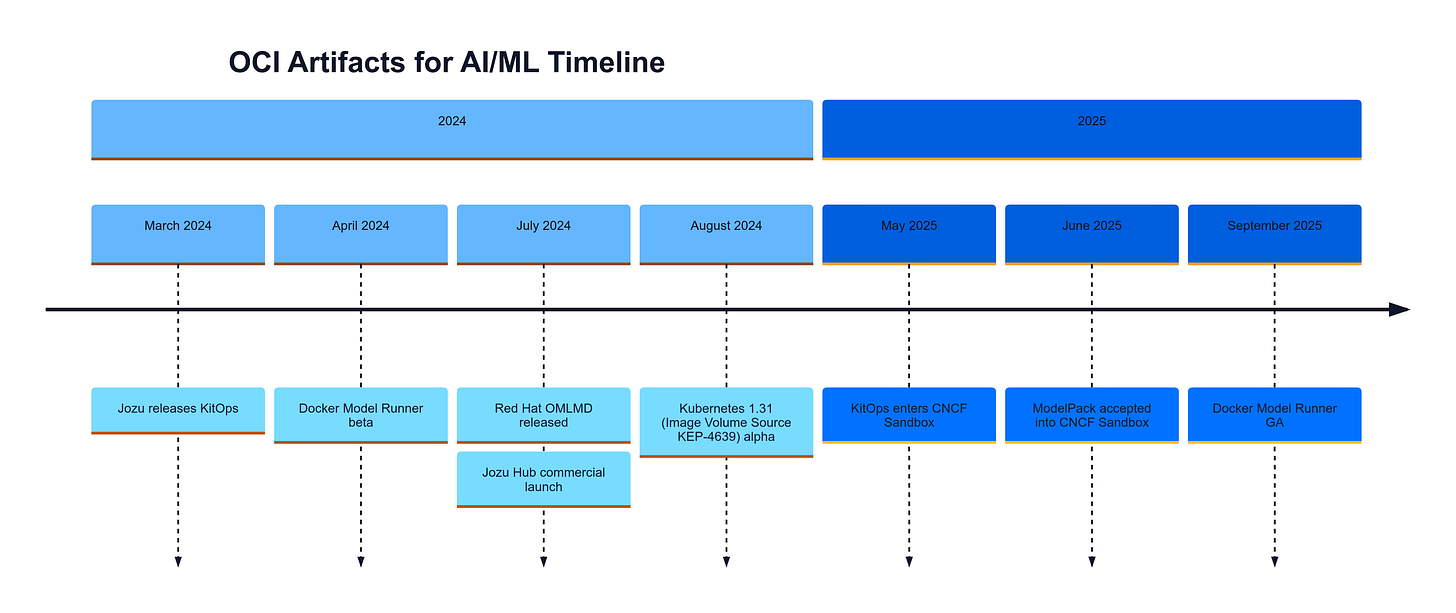

Let me walk through how those 18 months unfolded, because the timeline tells a story of ecosystem convergence:

The momentum started quietly. When Jozu released KitOps in March 2024, early users responded: “Why didn’t we think of this sooner?” By May 2025 it had entered the CNCF Sandbox, and it has now seen over 150K downloads. KitOps offers a Docker-like CLI (kit pack, kit push, kit pull) plus native Hugging Face integration, which lets teams import models into OCI registries with automatically generated metadata.

In June 2025 the CNCF accepted ModelPack into its Sandbox, making it the first vendor-neutral open standard for packaging ML artifacts as OCI objects. Contributors include Jozu, Red Hat, PayPal, Ant Group, and ByteDance, a sign of commitment well beyond startups. ModelPack builds directly on the OCI Image Manifest Specification v1.1 and defines custom media types so that models, datasets, code, and documentation travel as separate layers. Because registries address each layer by its content digest, identical layers are stored and transferred only once.
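To make the layering concrete, here is a minimal Python sketch of an OCI v1.1 image manifest that stores each component as its own layer. The media type strings and the build_manifest and blobs_to_upload helpers are hypothetical placeholders, not ModelPack’s actual identifiers, and for brevity the sketch uses the OCI v1.1 empty config descriptor instead of a model-specific config. It shows why the layering deduplicates: a layer is addressed by the SHA-256 digest of its bytes, so two model versions that share a dataset reference the same blob.

```python
import hashlib
import json

# Hypothetical media types for illustration only; see the ModelPack spec
# for the real identifiers.
ARTIFACT_TYPE = "application/vnd.example.model.manifest.v1+json"
MEDIA_TYPES = {
    "weights": "application/vnd.example.model.weight.v1.tar",
    "dataset": "application/vnd.example.model.dataset.v1.tar",
    "code": "application/vnd.example.model.code.v1.tar",
    "docs": "application/vnd.example.model.doc.v1.tar",
}

# OCI v1.1 empty config descriptor: the two-byte JSON document "{}".
EMPTY_CONFIG = {
    "mediaType": "application/vnd.oci.empty.v1+json",
    "digest": "sha256:" + hashlib.sha256(b"{}").hexdigest(),
    "size": 2,
}


def descriptor(media_type: str, blob: bytes) -> dict:
    """OCI content descriptor: a layer is identified by the SHA-256 of its bytes."""
    return {
        "mediaType": media_type,
        "digest": "sha256:" + hashlib.sha256(blob).hexdigest(),
        "size": len(blob),
    }


def build_manifest(parts: dict[str, bytes]) -> dict:
    """Assemble an OCI v1.1 image manifest with one layer per component."""
    return {
        "schemaVersion": 2,
        "mediaType": "application/vnd.oci.image.manifest.v1+json",
        "artifactType": ARTIFACT_TYPE,
        "config": EMPTY_CONFIG,
        "layers": [descriptor(MEDIA_TYPES[kind], blob) for kind, blob in parts.items()],
    }


def blobs_to_upload(manifests: list[dict]) -> set[str]:
    """Registries store blobs by digest, so identical layers are kept once."""
    return {layer["digest"] for m in manifests for layer in m["layers"]}


# Two model versions that share a dataset and docs but differ in weights.
shared = {"dataset": b"training rows ...", "docs": b"# Model card"}
v1 = build_manifest({"weights": b"weights-v1", **shared})
v2 = build_manifest({"weights": b"weights-v2", **shared})

print(json.dumps(v1, indent=2))
print(len(blobs_to_upload([v1, v2])))  # 4 unique blobs for 6 layer references
```

Only the weights layer differs between the two versions, so a registry that already holds v1 needs just one new blob to store v2.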

Docker shipped Model Runner in beta in April 2025, and it reached general availability in September 2025. Model Runner packages GGUF-format LLMs as OCI artifacts, treating models as first-class artifacts with domain-specific configuration schemas.

Red Hat’s OMLMD project (July 2024) delivers a Python SDK and CLI for working with ML models and metadata via OCI registries.

Kubernetes integration establishes the foundation

Kubernetes 1.31 (August 2024) introduced the Image Volume Source (KEP-4639) in alpha: native support for mounting OCI artifacts as read-only volumes in pods, designed with AI/ML use cases specifically in mind. It removes the need for the init-container pattern previously used to fetch models; instead, data scientists can package model weights as OCI objects that mount directly alongside the model server. KServe added OCI artifact support, and KitOps released a KServe integration that supports multiple cloud providers.
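Here is a minimal sketch of a Pod that uses the image volume source, written as a Python dict and printed as JSON, which kubectl accepts. The registry references and the model_server_pod helper are hypothetical. It assumes a cluster with the ImageVolume feature gate enabled and a container runtime that supports image volumes; whether a particular model artifact unpacks cleanly depends on that runtime.

```python
import json


def model_server_pod(model_ref: str, server_image: str) -> dict:
    """Pod that mounts an OCI artifact as a read-only image volume (KEP-4639)."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": "model-server"},
        "spec": {
            "containers": [{
                "name": "server",
                "image": server_image,
                # The model server reads its weights from this path.
                "volumeMounts": [
                    {"name": "model", "mountPath": "/models", "readOnly": True}
                ],
            }],
            "volumes": [{
                "name": "model",
                # Image volume source: the reference is pulled like an image and
                # its contents are mounted read-only, with no init container.
                "image": {"reference": model_ref, "pullPolicy": "IfNotPresent"},
            }],
        },
    }


# Hypothetical references for illustration.
pod = model_server_pod(
    model_ref="registry.example.com/models/demo-model:v1",
    server_image="registry.example.com/serving/model-server:latest",
)
print(json.dumps(pod, indent=2))
```

Piping the output to kubectl apply -f - creates the Pod. The server finds the weights at /models without an init container copying them into a scratch volume first.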

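As of October 2025, OCI specifications for AI/ML artifacts are genuinely production-ready. OCI v1.1 supplies the architectural foundation: the artifactType field identifies non-image content such as models, and the subject field together with the Referrers API lets signatures, SBOMs, and attestations attach to a model artifact without modifying it. Security tooling now spans ML-specific scanning, signing, attestations, and policy enforcement.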
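In OCI v1.1, the subject field is what ties supply-chain metadata to a model. Below is a minimal Python sketch of an attestation manifest that points at a model manifest through subject, plus the OCI Distribution v1.1 Referrers API URL a client would query to discover it. The registry host, repository, helper names, the placeholder statement, and the choice of application/vnd.in-toto+json as the attestation media type are illustrative assumptions; real signing tools define their own artifact types and typically wrap the statement in a signed envelope such as DSSE.

```python
import hashlib
import json
from urllib.parse import quote

MANIFEST_TYPE = "application/vnd.oci.image.manifest.v1+json"
# OCI v1.1 empty config for artifacts that need no config of their own.
EMPTY_CONFIG = {
    "mediaType": "application/vnd.oci.empty.v1+json",
    "digest": "sha256:" + hashlib.sha256(b"{}").hexdigest(),
    "size": 2,
}
# Assumed attestation media type, chosen for illustration.
ATTESTATION_TYPE = "application/vnd.in-toto+json"


def blob_descriptor(media_type: str, data: bytes) -> dict:
    """Content descriptor addressed by the SHA-256 digest of the exact bytes."""
    return {
        "mediaType": media_type,
        "digest": "sha256:" + hashlib.sha256(data).hexdigest(),
        "size": len(data),
    }


def serialize(manifest: dict) -> bytes:
    # A manifest's digest covers the exact bytes pushed, so serialize once
    # and reuse these bytes for both the digest and the upload.
    return json.dumps(manifest, separators=(",", ":"), sort_keys=True).encode()


def attestation_for(subject: dict, statement: bytes) -> dict:
    """Referrer manifest whose 'subject' points at the model manifest."""
    return {
        "schemaVersion": 2,
        "mediaType": MANIFEST_TYPE,
        "artifactType": ATTESTATION_TYPE,
        "config": EMPTY_CONFIG,
        "layers": [blob_descriptor(ATTESTATION_TYPE, statement)],
        "subject": subject,
    }


def referrers_url(registry: str, repo: str, digest: str, artifact_type: str) -> str:
    """Referrers API query (OCI Distribution v1.1), filtered by artifact type."""
    return (f"https://{registry}/v2/{repo}/referrers/{digest}"
            f"?artifactType={quote(artifact_type, safe='')}")


# Hypothetical model manifest with a single weights layer.
model = {
    "schemaVersion": 2,
    "mediaType": MANIFEST_TYPE,
    "artifactType": "application/vnd.example.model.v1",
    "config": EMPTY_CONFIG,
    "layers": [blob_descriptor("application/vnd.example.model.weight.v1", b"weights")],
}
model_desc = blob_descriptor(MANIFEST_TYPE, serialize(model))

# Placeholder statement, not a complete in-toto Statement.
statement = json.dumps({"predicateType": "https://example.com/eval/v1"}).encode()
att = attestation_for(model_desc, statement)
url = referrers_url("registry.example.com", "ml/demo-model",
                    model_desc["digest"], ATTESTATION_TYPE)
print(url)
```

A registry that implements the Referrers API answers that query with an image index listing every manifest whose subject matches the digest, so attestations, signatures, and SBOMs can be added after a model is published without changing the model manifest or its digest.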

Two OCI-based specifications have emerged, serving distinct contexts:

Docker Model Runner: targets desktop and local inference workflows; emphasizes GGUF-format models that run well on consumer hardware.

CNCF ModelPack: targets enterprise production; supports multiple model formats (SafeTensors, ONNX, PyTorch) plus governance artifacts (attestations, SBOMs, policy bundles).

Should you adopt this now?

OCI artifacts for ML make operational sense today if you are:

Running Kubernetes-native infrastructure

Under regulatory or internal compliance pressure for model provenance

Distributing models across multiple teams or external partners

Already container-centric in your release and operations workflows

If none of these apply, keep your current workflows—S3 buckets and DVC remain perfectly valid.

If you’re evaluating adoption and want to compare implementation patterns (what’s working and where things still break), send me a message or leave a comment below. If you’re building tooling in this space, I’d love to hear what you’re seeing.